More robots are expected in the not-too-distant future, including service robots that share their environment with humans. My personal prediction is that they will appear in workplaces, such as offices, before they enter our homes. The coming generations of such robots will be expensive and, unfortunately, unable to do many of the household tasks we would like them to do, such as washing clothes, cleaning the kitchen and perhaps cooking food for us.

When they do appear, they are likely to be autonomous, at least to some degree. This has caused much concern among those who think that autonomous entities can make decisions that are harmful to humans. Such decisions have been discussed, for example, in relation to self-driving cars, weapon systems and credit evaluations. And there are indeed many suggestions about how to limit the potential for robot transgressions. It is almost a field of research in its own right, in which researchers discuss concepts such as artificial morality, machine morality and roboethics. Some say that robots should be programmed so that there are things they would never do. Others, who are more optimistic about what is possible, have suggested that robots should be developed to have morality, that is, the ability to distinguish between right and wrong and to choose what is right.

As I see it, however, the main transgressors will still be humans. Our lives contain so many norms that it is simply inevitable that all of us will be transgressors every now and then. Those norms are sometimes conflicting and subject to debate, and they often stand in the way of our needs and wants. This, in turn, raises an additional but less well-researched issue about robot morality and transgressions: what happens when we, the humans, are the transgressors, and the robots understand that what we do is wrong? Would this reduce the number of transgressions and thereby make the world a better place? Or would it add to the feeling that we are often being monitored? In other words, robots with artificial morality that watch over us may create a deeply disturbing Orwellian environment. It is worth noting that several major corporations already carry out electronic surveillance of employees by means other than robots, and that employees typically view such surveillance in strongly negative terms.

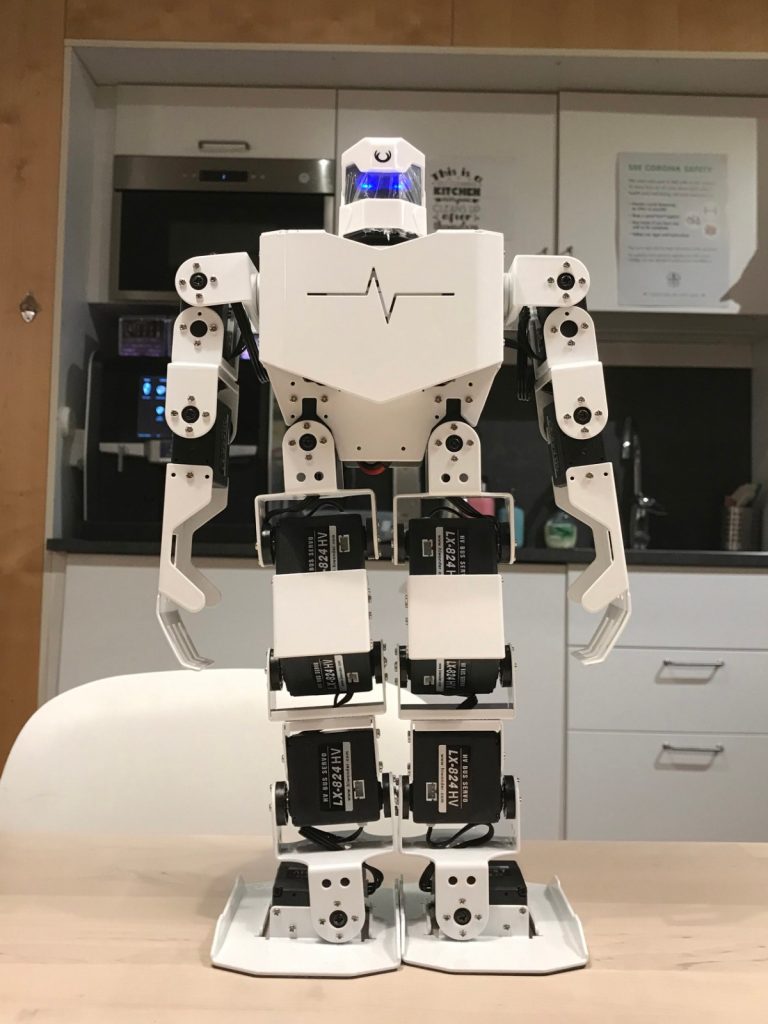

Anyway, I had a chance to examine this issue experimentally by having an office employee commit norm violations while a service robot was watching. I used my own robot, which has 16 degrees of freedom. This means it can do amazing things with its body, and it can be made to appear highly autonomous through the way it talks to humans in human language. In one experiment, the robot discovered that the employee was not doing what he said he was doing with his laptop. He said he was preparing a presentation on a sales strategy, but he was actually watching porn online. In the other experiment, the employee was about to take an apple in the office kitchen when he coughed heavily without covering his mouth, so that all the fruit in the bowl received a full shower of potentially contagious material (you can see what it looked like here).

Since these were experiments, the robot's behaviour was manipulated. In one version it did not understand that the employee was violating norms. In the other it did, and it signalled this by condemning the employee's wrongdoing. The robot-employee interactions were then shown to participants, whose task was to answer questions about the robot. As is typical in experiments of this type, each participant saw only one of the manipulated versions, and effects were identified by comparing the responses of participants who had seen different versions. In any event, the result was unambiguous: when the robot indicated that it had understood that the human was violating a norm, participants evaluated it more positively and perceived it as delivering a higher level of service quality.

This is perhaps not so surprising, because building a robot that understands that a human norm has been violated is quite a remarkable feat of engineering and programming. Yet part of me had expected such understanding to seem eerie. I thought it might point to an unwelcome future in which electronic besserwissers are everywhere, eager to pass judgement on what we do, and that this would dampen participants' impressions of the norm-savvy robot. But no such sentiments materialized in my experiments. Presumably, then, the results reflect a very human trait: we typically react very negatively to norm violations, even when we are not personally victimized. This tendency facilitates stable relationships with others, as well as all the forms of cooperation that have made Homo sapiens special.

Professor of Marketing and Head of the Center for Consumer Marketing (CCM), Stockholm School of Economics

Senior Fellow Researcher at CERS, Hanken